We all know how dependent Europe has become on US cloud providers, and we know the risks this brings in the current political climate. And yet we keep using more and more US cloud services. If you haven't already, read Bert Hubert's writings about the European cloud situation.

And to be honest, when customers ask me for advice on starting a new data engineering ecosystem, Microsoft Fabric and Databricks are at the top of my list.

While it might be hard to switch from Office 365 to open source alternatives (especially moving all your users to unfamiliar platforms), the data engineering landscape is full of widely adopted open source products, and end users rarely have to deal with them directly. Couldn't we run these products somewhere else? So I started investigating.

Let’s build a lakehouse

The plan is to do data engineering in the European cloud. What are we going to need?

Well, a simple data warehouse on a regular relational database can be built almost anywhere. Didn't know that? Check out these European cloud providers: almost all of them offer a managed PostgreSQL database, ready at the click of a button.

But let's take it a little further. A lot of organisations think they need Microsoft Fabric for data engineering. Microsoft Fabric provides "an end-to-end analytics and data platform designed for enterprises that require a unified solution".

It has (and this is not an exhaustive list):

- A data lake/lakehouse for storing data. This can be unstructured or structured data.

- A SQL database for storing data relationally.

- Data Factory (or rather, a dressed-down version of Azure Data Factory) for automation.

- Luckily, it also has Jupyter Notebooks for automation.

- Apache Spark for data engineering and data science.

- Power BI for analytics.

Can we build something like this with just open source products? I think we can start with a data lakehouse, which is entirely possible with the following building blocks:

- Kubernetes

- Object storage

- Open table format

- Apache Spark

Other things on the wish list might be:

- Code-free data pipelines (similar to Azure Data Factory).

- Analytics solutions.

Kubernetes

Containers are a great way to (build and) ship applications, and Kubernetes is a container orchestration solution. Anything you can run or build in a Docker container, you can run in Kubernetes. Once we have Kubernetes running, we can deploy the applications our data lakehouse needs.
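To make "anything you can run in a Docker container, you can run in Kubernetes" a bit more concrete, here is a minimal sketch of a Kubernetes Deployment for a single container, built as a Python dict and printed as JSON (`kubectl apply` accepts JSON just like YAML). The `notebooks` name and the Jupyter image are examples I picked, not part of any specific setup; a real deployment would probably also need a Service, resource limits and persistent storage.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1, port: int = 8080) -> dict:
    """Return a minimal apps/v1 Deployment that runs a single container image."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Example image (assumed): the Jupyter PySpark notebook image, which listens on 8888.
    manifest = deployment_manifest("notebooks", "quay.io/jupyter/pyspark-notebook", port=8888)
    # Usage: python deployment.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```

The same function works for any image, which is the point: once the cluster exists, each extra lakehouse component is mostly a matter of describing another container.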

Object storage

Object storage is comparable to AWS S3. It is often used in data lake and lakehouse solutions, and it is relatively cheap.

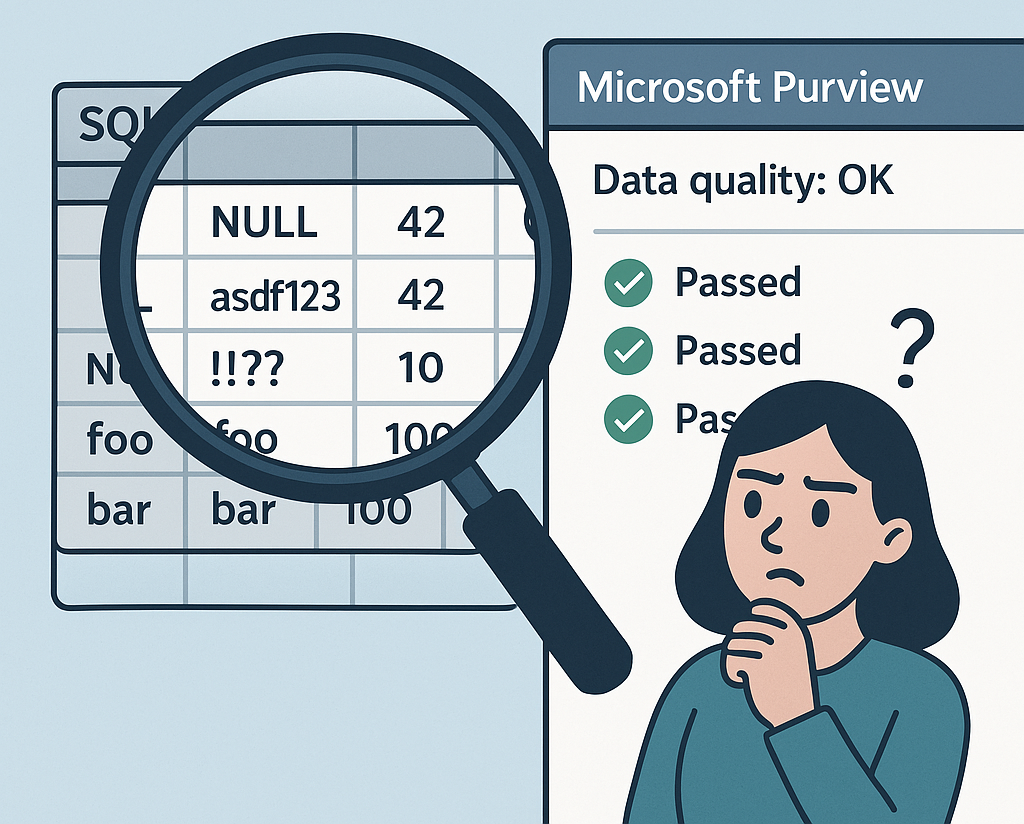
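Because these object stores generally speak the S3 API, tools such as Spark can use them through Hadoop's S3A connector simply by pointing it at a different endpoint. The sketch below collects the relevant settings and renders them as `--conf` arguments. The endpoint URL is an assumption based on Scaleway's regional naming (check your provider's documentation), the environment variable names `S3_ACCESS_KEY` and `S3_SECRET_KEY` are made up for this example, and it assumes the `hadoop-aws` jars are available to Spark.

```python
import os


def s3a_settings(endpoint: str, access_key: str, secret_key: str) -> dict[str, str]:
    """Spark settings for Hadoop's S3A connector against a non-AWS, S3-compatible store."""
    return {
        # Send S3 requests to the European provider instead of AWS.
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # Path-style URLs (endpoint/bucket/key) avoid depending on per-bucket DNS names.
        "spark.hadoop.fs.s3a.path.style.access": "true",
        "spark.hadoop.fs.s3a.impl": "org.apache.hadoop.fs.s3a.S3AFileSystem",
    }


def to_conf_args(settings: dict[str, str]) -> list[str]:
    """Render settings as spark-submit style '--conf key=value' arguments."""
    args: list[str] = []
    for key, value in settings.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    settings = s3a_settings(
        "https://s3.fr-par.scw.cloud",  # assumed endpoint, check your provider's docs
        os.environ.get("S3_ACCESS_KEY", ""),  # hypothetical variable names
        os.environ.get("S3_SECRET_KEY", ""),
    )
    # Leave the secret out of the printout; this only shows the shape of the arguments.
    print(to_conf_args({k: v for k, v in settings.items() if "secret" not in k}))
```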

Open table formats

Modern data lakehouses use Parquet as the file format, with an open table format such as Delta Lake, Apache Iceberg or Apache Hudi built on top of it. These formats give you relational database-like tables that you can query with SQL, which lets you build a data warehouse on top of plain files.

The engines that read and write these formats can run from containers in Kubernetes.
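The core idea behind an open table format can be shown with a toy example. Delta Lake, for instance, keeps a transaction log of JSON commits next to the Parquet files; each commit adds or removes data files, and the current table is whatever you get by replaying the log in order. The sketch below is heavily simplified (real Delta logs also carry metadata, protocol and statistics plus checkpoints, and Iceberg uses snapshots and manifest files instead), and the file names are made up.

```python
import json


def replay_log(commits: list[str]) -> set[str]:
    """Return the data files that make up the table after applying commits in order.

    Each commit is newline-delimited JSON with simplified Delta-style
    {"add": {"path": ...}} or {"remove": {"path": ...}} actions.
    """
    live_files: set[str] = set()
    for commit in commits:
        for line in commit.splitlines():
            if not line.strip():
                continue  # skip blank lines
            action = json.loads(line)
            if "add" in action:
                live_files.add(action["add"]["path"])
            elif "remove" in action:
                live_files.discard(action["remove"]["path"])
    return live_files


if __name__ == "__main__":
    log = [
        # Version 0: the first write creates two Parquet files.
        '{"add": {"path": "part-0.parquet"}}\n{"add": {"path": "part-1.parquet"}}',
        # Version 1: an UPDATE rewrites part-1 into part-2.
        '{"remove": {"path": "part-1.parquet"}}\n{"add": {"path": "part-2.parquet"}}',
    ]
    print(sorted(replay_log(log)))      # ['part-0.parquet', 'part-2.parquet']
    print(sorted(replay_log(log[:1])))  # ['part-0.parquet', 'part-1.parquet']
```

Replaying only the first commit gives the table as it was at version 0, which is roughly how these formats offer time travel without copying data.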

Apache Spark

Spark is often used for advanced data pipelines. You can do transformations with it, and Spark also understands SQL. Add Jupyter Notebooks and data engineers and data scientists can start using the data lakehouse. Spark can also run in containers on Kubernetes.
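Spark has native Kubernetes support: with `spark-submit --master k8s://<api-server>` in cluster mode, the driver and executors run as pods. The sketch below only builds that command line. The API server URL, namespace, service account name (`spark`), image and application path are placeholders I made up; the service account needs RBAC permission to create pods, and the image must contain Spark and your application.

```python
import shlex


def spark_submit_on_k8s(api_server: str, image: str, app: str, namespace: str = "default",
                        service_account: str = "spark", executors: int = 2) -> list[str]:
    """Build a spark-submit command that runs the driver and executors as Kubernetes pods."""
    return [
        "spark-submit",
        # The k8s:// prefix selects Spark's Kubernetes scheduler backend.
        "--master", f"k8s://{api_server}",
        # In cluster mode the driver itself also runs as a pod.
        "--deploy-mode", "cluster",
        "--name", "lakehouse-job",
        "--conf", f"spark.kubernetes.container.image={image}",
        "--conf", f"spark.kubernetes.namespace={namespace}",
        "--conf", f"spark.kubernetes.authenticate.driver.serviceAccountName={service_account}",
        "--conf", f"spark.executor.instances={executors}",
        app,
    ]


if __name__ == "__main__":
    cmd = spark_submit_on_k8s(
        "https://k8s.example.eu:6443",               # hypothetical API server URL
        "registry.example.eu/lakehouse/spark:3.5",   # hypothetical image with Spark inside
        "local:///opt/app/job.py",                   # hypothetical script baked into the image
    )
    print(shlex.join(cmd))
```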

Code-free data pipelines

If we really are going to measure our solution against Microsoft Fabric, we're also going to need something like Azure Data Factory, which lets you build data pipelines in a visual interface. Note, though, that the last time I checked, Fabric's Data Factory was a dressed-down version of Azure Data Factory with its own limitations. So it is reasonable if our solution has some limitations too.

Possible solutions might be:

- Apache Airflow with Astronomer UI

- Meltano

- Dagster

- Apache NiFi

Analytics solutions

It's hard to beat Power BI. But as long as Power BI is willing to connect to our data lakehouse (which it should be, since it will be a normal lakehouse built on standard products), this should work.

First let’s get our Kubernetes and object storage. We can build almost anything else from there. But before we get any further…

The rules

Let's first lay down some rules for our data lakehouse project.

These rules are:

- A true sovereign solution.

- Cloud solutions, not hosting solutions.

- Limited complexity.

- Enterprise-level security.

A true sovereign solution

Basically, this means that if Trump wants to turn the data solution off or spy on the data, he can't (short of hacking the systems). This rules out the so-called sovereign solutions offered by US cloud providers. Recently it became quite clear that Microsoft, for example, can't offer this, and I don't expect other US cloud providers can either.

Cloud solutions, not hosting solutions

We will be looking at a few cloud providers, but other providers will say "but we provide that too". Yes, but do you do hosting or do you do cloud?

In other words, after I create an account on your site, can I enter my credit card details, go to your Kubernetes/PostgreSQL/object storage offerings and create them immediately?

Or do I have to "call now for our attractive rates"? Do I first need to talk to your sales representative? That's a no-go. That is not cloud.

Limited complexity

Building this solution should not be overly complex. It should not take me 2 weeks to build the whole data lakehouse setup (because I don’t have 2 weeks).

Enterprise level security

A poorly secured data ecosystem may be fine for my own projects or for open data, but the data lakehouse I envision should have security options that are useful for companies and governments that want to build their own data lakehouse in the European cloud.

What do the European cloud providers offer?

While Europe hasn't been able to keep up with all the AI "enriched" cloud solutions, it's not as if European providers can only host a few servers. Let's see what they offer.

The European Alternatives site helps us here. Strangely enough, the site has categories for Kubernetes and object storage, but not for databases. Don't worry, though: the companies that offer the first two (of course) also offer databases. Let's take a look at a couple of them.

Scaleway

Scaleway is a French cloud provider, and if you are a regular user of cloud providers, its site will look pretty familiar. They have Kubernetes, object storage and PostgreSQL (managed and serverless). They also have non-relational databases like MongoDB and Redis. And talking data engineering: they have a managed Data Warehouse for ClickHouse. They also offer messaging and queuing solutions, so you will find Kafka here.

If you thought that European cloud providers are too far behind to do AI or offer GPUs, think again. They even offer a quantum computing solution.

Pricing works precisely as you'd expect from a cloud provider. There is a cost manager and even an overview of your environmental footprint. You get 52 days of free usage (as far as I remember).

Gridscale

Gridscale is a German cloud provider. They offer managed Kubernetes, databases and object storage. I don't see many enterprise security features, though, so I'll have to see how that plays out.

OVHcloud

OVHcloud is another French cloud provider. To my surprise, they even offer the site in Dutch. They offer Kubernetes, PostgreSQL and object storage, as well as non-relational databases like MongoDB and OpenSearch, and Kafka solutions. And they already offer data lakehouse solutions! They call it Lakehouse Manager, and it is based on Apache Iceberg, precisely what I was planning to use. They also have a data catalog.

Pricing is published on the site, which is also to my liking. The site could do with a UX makeover, though, because it is a bit confusing at times.

There are more providers

European Alternatives lists 10 other Kubernetes providers. I wasn't able to check them all, so I went with the big ones first. If I have more time, I'm happy to try out more providers.

What’s next?

I have decided to start with building my data lakehouse on Scaleway. I’ll let you know how it went in Part 2 of this series.
