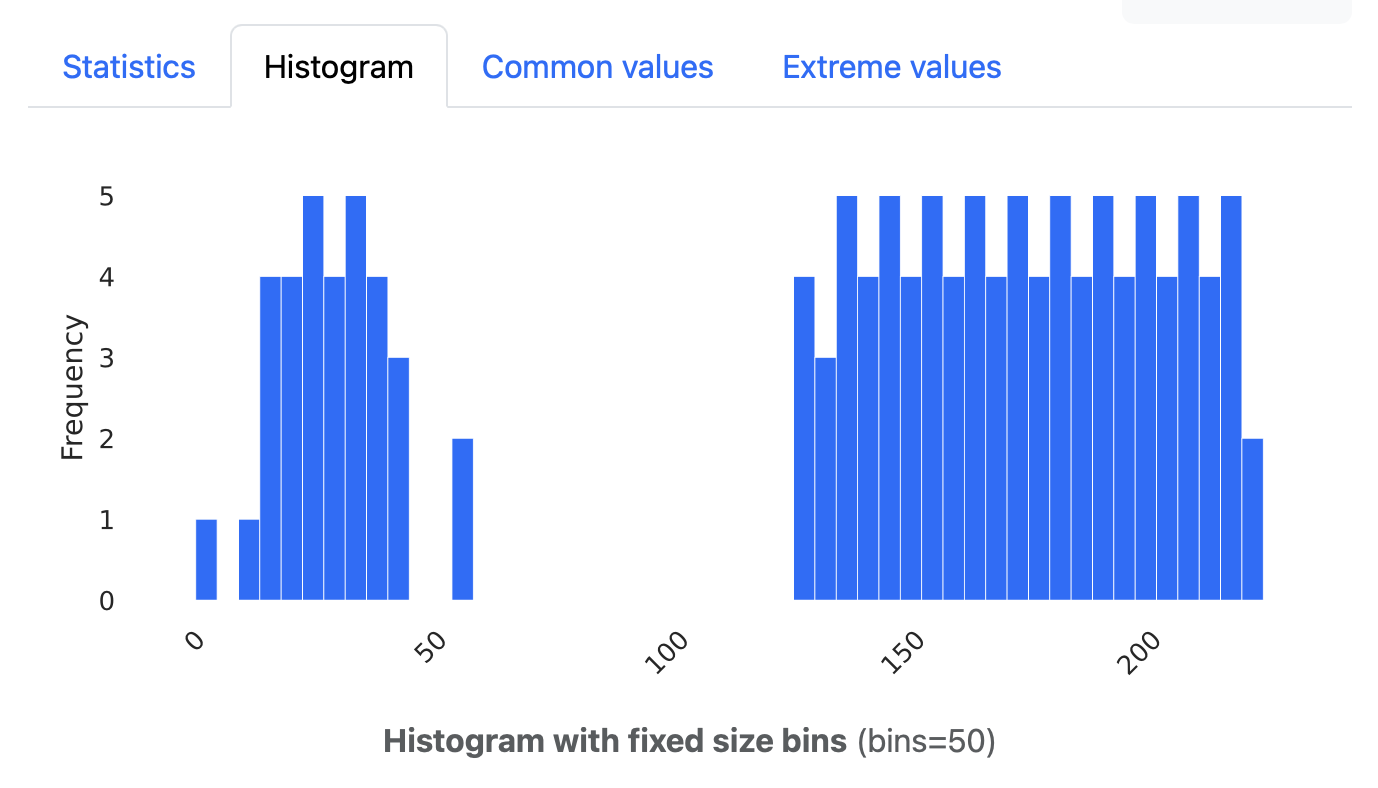

Profiling data with ydata in PySpark

When you have a dataset to explore, there are several ways to do that in PySpark. You can call describe() or summary() on the DataFrame. But if you want something a little more advanced that gives you a fuller view of what is in the data, you might want to try data profiling.
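To make the built-in options concrete, here is a minimal sketch of both calls. The helper names `quick_stats` and `demo` and the tiny example DataFrame are made up for illustration; only `describe()` and `summary()` are the PySpark methods mentioned above.

```python
def quick_stats(df):
    """Return the two built-in summary DataFrames for a Spark DataFrame."""
    # describe() reports count, mean, stddev, min and max.
    basic = df.describe()
    # summary() lets you pick statistics, including approximate percentiles.
    extended = df.summary("count", "min", "25%", "50%", "75%", "max")
    return basic, extended


def demo():
    # Imported inside the function so the helper above has no hard
    # dependency on a running Spark installation.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("quick-stats").getOrCreate()
    # Hypothetical toy data, only here to have something to summarize.
    df = spark.createDataFrame(
        [(1, "a", 2.5), (2, "b", None), (3, "a", 4.0)],
        ["id", "category", "value"],
    )
    basic, extended = quick_stats(df)
    basic.show()
    extended.show()
    spark.stop()
```

Calling `demo()` in an environment with PySpark prints both tables. They are useful, but they only give per-column aggregates, which is where profiling comes in.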

Older documentation might point you to pandas-profiling, but that package has been renamed: the functionality now lives in the Python package ydata-profiling (which is imported as ydata_profiling).
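The difference between the install name and the import name is easy to trip over, so here is a minimal sketch. The function name `build_profile_html` and the default title are my own; the sketch assumes the package was installed with `pip install ydata-profiling` and that `df` is a DataFrame that ydata-profiling can handle.

```python
def build_profile_html(df, title="Profiling report"):
    """Profile a DataFrame and return the report as an HTML string."""
    # Installed as "ydata-profiling" (hyphen), imported as "ydata_profiling"
    # (underscore). Imported here so a missing package only fails on use.
    from ydata_profiling import ProfileReport

    report = ProfileReport(df, title=title)
    return report.to_html()
```

In a Databricks notebook you could pass the returned string to `displayHTML(...)`. Elsewhere, writing it to a file and opening that in a browser should work too.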

I’ve been following this blog post on getting started with ydata-profiling:

https://www.databricks.com/blog/2023/04/03/pandas-profiling-now-supports-apache-spark.html

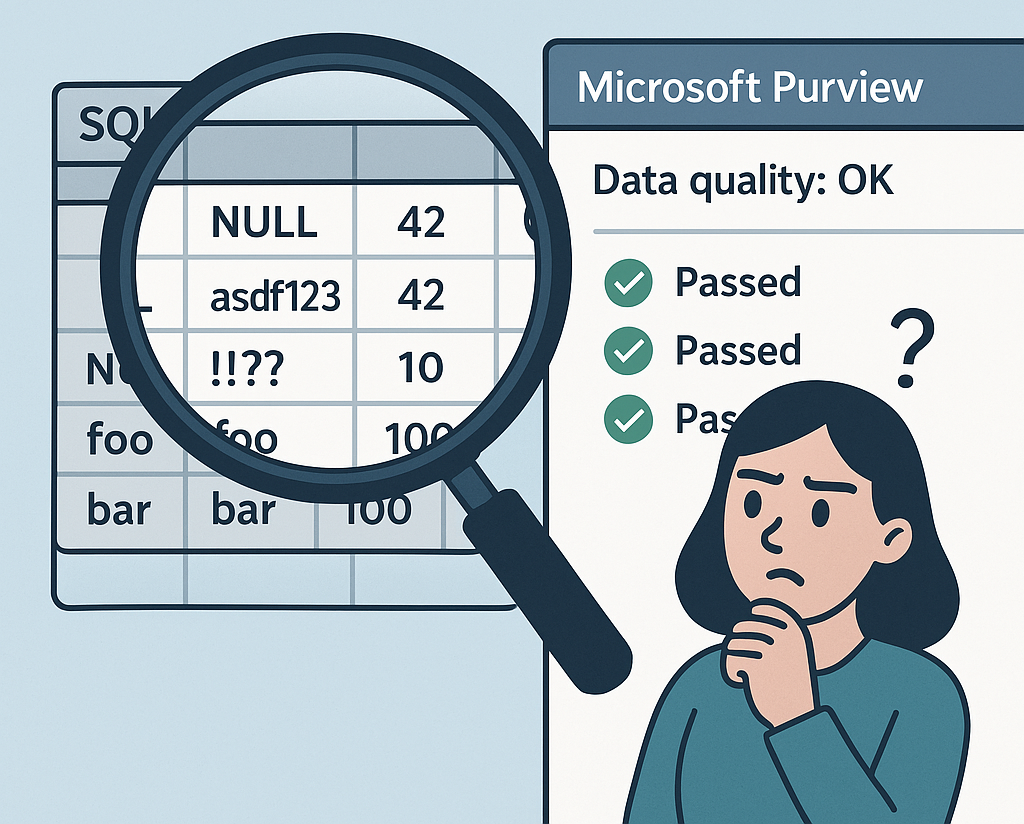

Getting ydata-profiling to work is not exactly a walk in the park. You’d think you could just feed it your messy dataset and it would show you what the data looks like. But I ran into some problems:

- I got errors about missing Python packages in some situations.

- ydata-profiling doesn’t seem to like DataFrames with only string columns.
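Both problems can be spotted before starting a long profiling run. The following is a rough sketch of two pre-flight checks, not a fix. The helper names are hypothetical, and which module names to check is an assumption you would fill in from your own error messages.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def has_only_string_columns(df):
    """Return True if the DataFrame has columns and all of them are strings."""
    # A Spark DataFrame exposes dtypes as a list of (column_name, type_name).
    dtypes = df.dtypes
    return bool(dtypes) and all(type_name == "string" for _, type_name in dtypes)
```

For example, `missing_modules(["pyspark", "ydata_profiling"])` returns an empty list when both can be imported. If `has_only_string_columns(df)` is true, you may want to cast some columns to a more specific type before profiling.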