Data engineering in the European cloud – Part 2: Scaleway

This is Part 2 of a series in which I try to build a data engineering environment in the European cloud. In Part 1 I described my plan for a data lakehouse. Now it’s time to get our hands dirty, and we’re going to do that on Scaleway.

The architecture

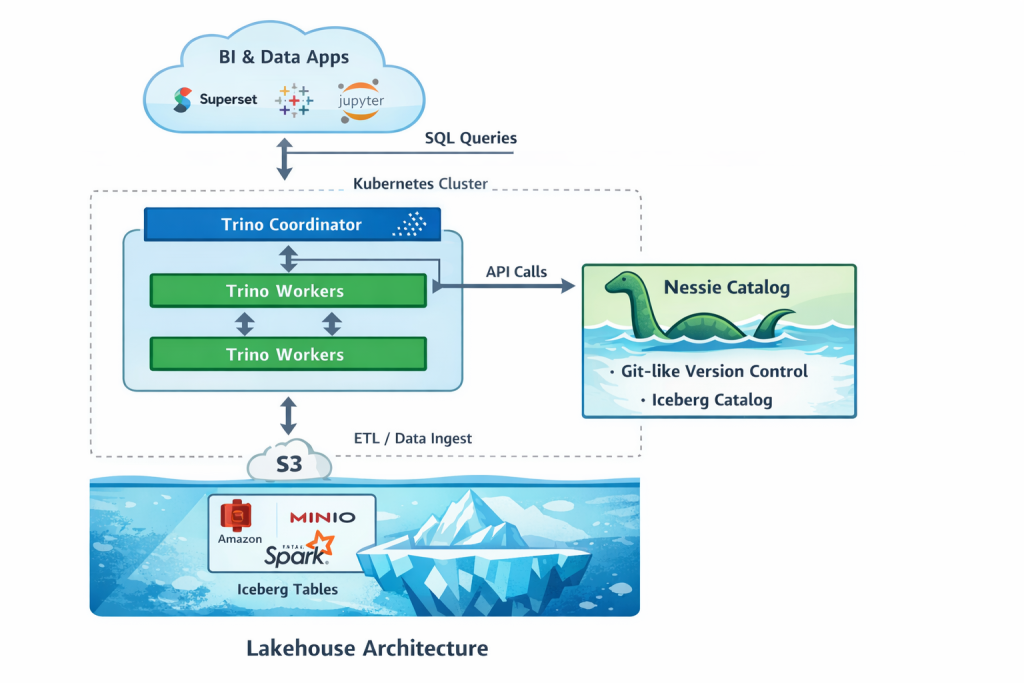

To get this data lakehouse running we will create a Kubernetes cluster for compute and object storage for the data. The containerised applications that make up the lakehouse will run on Kubernetes. I consulted ChatGPT for this architecture, and it proposed a more modern solution than the one I originally had in mind.

We’re going to use the Apache Iceberg open table format. This will allow us to create database-like tables on top of Parquet-formatted files. Nessie will be the Iceberg data catalog (Hive Metastore was another option). The catalog allows our data solutions to find the Iceberg tables and their underlying Parquet files.
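
To make the role of the catalog a bit more concrete, here is a toy Python model of the lookup it performs. This is only a sketch of the idea, not Nessie's or Iceberg's API: the `ToyCatalog` and `TableMetadata` classes, the `sales.orders` table, and the `my-lakehouse` bucket are all hypothetical names I made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TableMetadata:
    """Simplified stand-in for the metadata an Iceberg table keeps."""

    location: str  # table root in object storage (hypothetical bucket)
    data_files: list[str] = field(default_factory=list)  # Parquet files of the table


class ToyCatalog:
    """Hypothetical in-memory catalog: maps table names to their metadata."""

    def __init__(self) -> None:
        self._tables: dict[str, TableMetadata] = {}

    def register(self, name: str, metadata: TableMetadata) -> None:
        self._tables[name] = metadata

    def files_for(self, name: str) -> list[str]:
        # A query engine asks the catalog for a table by name and gets back
        # the Parquet files it has to read.
        return list(self._tables[name].data_files)


catalog = ToyCatalog()
catalog.register(
    "sales.orders",
    TableMetadata(
        location="s3://my-lakehouse/sales/orders",
        data_files=[
            "s3://my-lakehouse/sales/orders/data/part-0001.parquet",
            "s3://my-lakehouse/sales/orders/data/part-0002.parquet",
        ],
    ),
)

for path in catalog.files_for("sales.orders"):
    print(path)
```

A real catalog does much more than this (snapshots, schema evolution and, in the case of Nessie, branches), but the basic job is the same: given a table name, tell the engine where the files are.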

Trino will be the query engine. That will be the fastest way to get our first queries going.
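
As a rough idea of how Trino could be pointed at Nessie and the object storage, the Python sketch below renders a Trino catalog properties file. The property names are the ones I understand the Trino Iceberg connector to use for a Nessie catalog and its native S3 file system, so please check them against the documentation for your Trino version. The `render_iceberg_catalog` helper, the `nessie` service hostname, and the `my-lakehouse` bucket are assumptions for illustration, and credentials are deliberately left out.

```python
def render_iceberg_catalog(
    nessie_uri: str, warehouse: str, s3_endpoint: str, s3_region: str
) -> str:
    """Render the contents of a Trino catalog file, e.g. etc/catalog/lakehouse.properties."""
    props = {
        "connector.name": "iceberg",
        # Use Nessie as the Iceberg catalog.
        "iceberg.catalog.type": "nessie",
        "iceberg.nessie-catalog.uri": nessie_uri,
        "iceberg.nessie-catalog.ref": "main",
        "iceberg.nessie-catalog.default-warehouse-dir": warehouse,
        # Read and write Parquet files through the S3-compatible object storage.
        "fs.native-s3.enabled": "true",
        "s3.endpoint": s3_endpoint,
        "s3.region": s3_region,
        "s3.path-style-access": "true",
        # Access and secret keys are omitted here; inject them from a secret.
    }
    return "\n".join(f"{key}={value}" for key, value in props.items()) + "\n"


properties_text = render_iceberg_catalog(
    nessie_uri="http://nessie:19120/api/v2",  # hypothetical in-cluster service name
    warehouse="s3://my-lakehouse/warehouse",  # hypothetical bucket
    s3_endpoint="https://s3.fr-par.scw.cloud",  # Scaleway Object Storage, Paris region
    s3_region="fr-par",
)
print(properties_text)
```

In a Kubernetes setup the rendered text would typically end up in a ConfigMap or in the values file of a Helm chart rather than being written by hand on a server.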
